Robots.txt is a simple yet important text file for managing how search engines crawl and index your website. This article from ProxyV6 will provide an overview of the Robots.txt file, along with detailed instructions on how to create and configure it.

What is the Robots.txt file?

The robots.txt file is a plain text file with a .txt extension that implements the Robots Exclusion Protocol (REP). It tells search engine crawlers (also called web robots) which parts of a website they may crawl. Because a bot can only evaluate content it is able to crawl, these rules also influence what search engines can index and show to users.

Robots.txt plays a crucial role in managing the access of search bots to your website’s content. By specifying which paths bots should not access, it keeps well-behaved crawlers away from pages you do not want crawled and focuses the data collection process on your important pages, which supports SEO efficiency.

Creating a robots.txt file for your website helps you control bot access to specific areas of the site, improving site performance, user experience, and search rankings. The robots.txt file helps:

- Prevent duplicate content from appearing on the website.

- Keep some parts of the site private.

- Prevent internal search result pages from appearing on SERPs.

- Specify the location of the website’s sitemap (XML) for search engines.

- Keep search engine crawlers such as Googlebot from fetching certain files on your site (images, PDFs, etc.).

- Use the Crawl-delay command to set a delay time, preventing the server from being overwhelmed when crawlers load multiple pieces of content at once. Not every crawler honors this command; Googlebot, for example, ignores it.
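As a rough illustration of the Crawl-delay item, the sketch below uses Python's standard `urllib.robotparser` module to read a delay from a made-up rule set. The bot name, the 10-second value, and the `/search/` path are assumptions chosen for the example, and whether a real crawler respects the delay is up to that crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask Bingbot to wait 10 seconds between requests
# and keep it out of internal search result pages.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Delay (in seconds) declared for Bingbot in the rules above.
delay = parser.crawl_delay("Bingbot")

# Internal search result pages are off limits for Bingbot.
search_allowed = parser.can_fetch("Bingbot", "https://www.example.com/search/shoes")

# No group mentions Googlebot, so no delay is declared for it.
googlebot_delay = parser.crawl_delay("Googlebot")

print(delay, search_allowed, googlebot_delay)
```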

Syntax of the robots.txt file

The syntax of the robots.txt file works like signage for visitors. Imagine you own a museum (your website) and want to tell visitors (search engine bots) which areas they may and may not enter. The robots.txt file is that set of instructions, written as a simple text file.

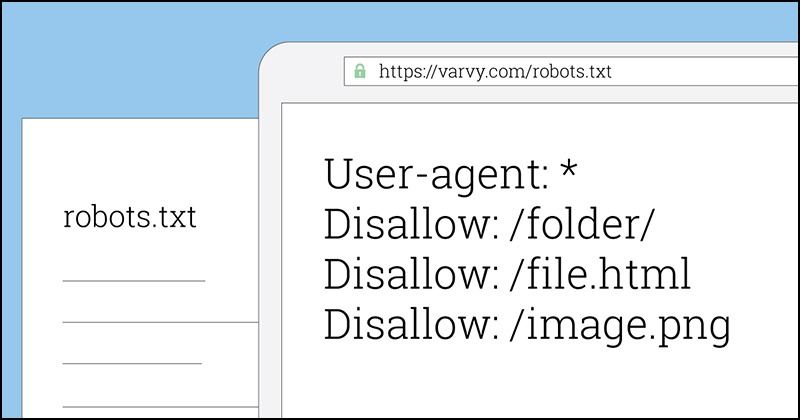

Basic syntax of the robots.txt file

- User-agent: This is the name of the bot you are giving instructions to. For example, Googlebot for Google’s bot, Bingbot for Bing’s bot.

- Disallow: This is the command to prevent the bot from accessing a specific part of the website.

- Allow: This is the command to allow the bot to access a specific part of the website (usually used when you want to allow access to a subpage within a disallowed directory).

- Sitemap: This is the path to your sitemap file, helping search bots understand the structure of the website and easily index it.
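To see the four directives working together, here is a minimal sketch that parses a small rule set with Python's standard `urllib.robotparser` module. The paths, the sitemap URL, and the page names are invented for the example. Note that Python's parser applies the first rule that matches, so the `Allow` line is placed before the broader `Disallow` line; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule set using all four basic directives.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BASE = "https://www.example.com"

# A page inside the disallowed directory is blocked.
report_allowed = parser.can_fetch("Googlebot", BASE + "/private/report.html")

# The explicitly allowed page inside that directory stays crawlable.
press_kit_allowed = parser.can_fetch("Googlebot", BASE + "/private/press-kit.html")

# Anything not mentioned in the rules is crawlable by default.
blog_allowed = parser.can_fetch("Googlebot", BASE + "/blog/")

# Sitemap lines are collected separately from the per-bot rules.
sitemaps = parser.site_maps()

print(report_allowed, press_kit_allowed, blog_allowed, sitemaps)
```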

Importance in SEO

- Access control: The robots.txt file helps you control which parts of the website search engines crawl. This is crucial for keeping unnecessary or low-value pages out of the crawl.

- Resource optimization: By guiding bots not to access unnecessary areas, you help save bandwidth and server resources.

- Improve ranking: By steering search bots toward the important parts of the website, you help search engines better understand its structure and content, which can support better search rankings.

- Prevent indexing errors: If there is duplicate content or unnecessary pages being indexed, the robots.txt file helps prevent this, ensuring that only important and high-quality pages are displayed in search results.

Why do you need to create a robots.txt file?

Have you ever wondered why you need to create a robots.txt file? Imagine you are hosting a big party at your house. You want your guests to enter specific rooms and avoid others. To do this, you need to write a guide and stick it on the front door to direct guests on where they should and shouldn’t go.

The robots.txt file works like that guide, but for search engine bots such as Googlebot. When these bots visit your website, they read the robots.txt file to learn which parts of the site you want them to access and which parts you consider private or not worth crawling.

For example, you might have a section on your site with duplicate information or internal search result pages that are not useful for users when searching on Google. By using the robots.txt file, you can prevent search bots from crawling and indexing these pages, making your site cleaner and easier to search.

Moreover, if you have large files such as images or PDFs that do not need to appear in search results, you can also block them using the robots.txt file. This helps save server resources and makes the search bots’ crawling process more efficient.

In summary, the robots.txt file acts like a guide for search bots, helping you control what they should and should not see on your site. This not only helps protect private information but also optimizes your website, improving user experience and increasing your ranking in search results.

Limitations of the robots.txt file

Although the robots.txt file is useful for controlling search bot access, it still has some significant limitations. Understanding these limitations will help you apply the robots.txt file more effectively in your SEO strategy.

Some search engines do not support commands in the robots.txt file

Not all search engines will support commands in the robots.txt file. This means that some search bots may still access and collect data from files you want to keep private. To better secure data, you should password-protect private files on the server.

Each crawler interprets the syntax in its own way

Reputable crawlers generally follow the standard commands in the robots.txt file. However, each search engine parses the file slightly differently, and some may not understand every command. Therefore, web developers need to understand how the crawlers that matter to their website interpret the syntax.
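One concrete difference is how overlapping Allow and Disallow rules are resolved. Google documents that it uses the most specific (longest) matching rule, whereas the parser in Python's standard library, as implemented in CPython at the time of writing, applies the first rule that matches. The sketch below shows the same two rules producing different answers in Python depending only on their order; the bot name `ExampleBot` and the paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, agent: str = "ExampleBot") -> bool:
    """Parse the given rules and ask whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


URL = "https://www.example.com/private/press-kit.html"

# Same two rules, different order.
DISALLOW_FIRST = """\
User-agent: *
Disallow: /private/
Allow: /private/press-kit.html
"""

ALLOW_FIRST = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""

# First-match parsing: the broad Disallow wins when it is listed first.
disallow_first_result = allowed(DISALLOW_FIRST, URL)

# With the Allow line first, the same URL becomes crawlable.
allow_first_result = allowed(ALLOW_FIRST, URL)

print(disallow_first_result, allow_first_result)
```

A crawler that uses longest-match resolution would treat both files the same way and allow the press kit page, so listing the more specific rule first is a reasonable way to keep the file unambiguous across parsers.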

Blocked by robots.txt but Google can still index

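Blocking a URL in the robots.txt file stops Google from crawling it, but it does not guarantee that the URL stays out of the index. If other pages link to the blocked URL, Google can still index the address itself and show it in search results, usually without a description. If the content is not important, delete the page from the website. If it must stay online but out of search results, protect it with a password or use a noindex meta robots tag, which Google can only see when the page is not blocked in robots.txt.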

The robots.txt file does not fully protect data

Although the robots.txt file helps control search bot traffic, it cannot fully protect your data. Search engines that do not follow standards or malicious bots can ignore this file and access your data. Therefore, data security also depends on other measures such as user authentication and access management.

Limitations in preventing duplicate content

The robots.txt file can help prevent duplicate content from appearing in search results, but it is not always effective. Search bots may still find and index duplicate content if not configured correctly. The use of meta robots tags and other measures should also be considered to address this issue.

How does the robots.txt file work?

The robots.txt file is a simple text file that guides search bots like Googlebot on how to crawl and index content on your website. This file plays an important role in controlling and optimizing the data crawl process, thereby improving the website’s search ranking. So how does the robots.txt file work?

Crawling data on the website

Search engines have two main tasks: crawling data on the website to discover content and indexing that content to meet user search requests. This process is called “Spidering,” where search bots follow links from one page to another, collecting data from billions of different web pages.

Finding and reading the robots.txt file

Before starting the spidering process, search engine bots like Googlebot look for the robots.txt file on the website. If they find it, they read this file first to learn how to crawl the site. The robots.txt file contains specific instructions on how bots should or should not collect data from different parts of the website.
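Because the file always sits at the root of the host, a crawler can work out where to look from any page URL. A minimal sketch of that step, using a hypothetical helper named `robots_txt_url` and made-up example URLs:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that hosts `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (plus port, if any); drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


blog_robots = robots_txt_url("https://www.example.com/blog/post?id=1#top")
shop_robots = robots_txt_url("http://shop.example.com:8080/cart")

print(blog_robots)
print(shop_robots)
```

Each host name, including subdomains, has its own robots.txt, which is why the helper keeps the full host rather than reducing it to the main domain.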

Specific guidance for the crawling process

The robots.txt file guides search bots on which areas of the website they should crawl and which areas to avoid. For example, you can block bots from accessing admin pages or private files to protect sensitive information and optimize the data collection process.

No robots.txt file or specific instructions

If the robots.txt file does not contain any instructions for User-agents or if you do not create a robots.txt file for the website, search bots will freely collect data from all parts of the site. This can lead to unimportant pages or duplicate content being indexed, reducing SEO effectiveness.
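The default-allow behavior can be sketched with Python's standard `urllib.robotparser` module. Both rule sets below are invented for the example: one is empty, and the other only addresses a hypothetical bot called `ExampleBot`.

```python
from urllib.robotparser import RobotFileParser

URL = "https://www.example.com/wp-admin/"

# Case 1: a robots.txt file with no rules at all.
no_rules = RobotFileParser()
no_rules.parse([])

# Case 2: rules exist, but only for a different (hypothetical) bot.
other_bot_only = RobotFileParser()
other_bot_only.parse(["User-agent: ExampleBot", "Disallow: /"])

# With no rules, every bot may fetch every URL.
empty_file_allows = no_rules.can_fetch("Googlebot", URL)

# A bot that no group mentions is also unrestricted.
unlisted_bot_allowed = other_bot_only.can_fetch("Googlebot", URL)

# Only the bot named in the file is actually blocked.
listed_bot_allowed = other_bot_only.can_fetch("ExampleBot", URL)

print(empty_file_allows, unlisted_bot_allowed, listed_bot_allowed)
```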

Where is the robots.txt file located on a website?

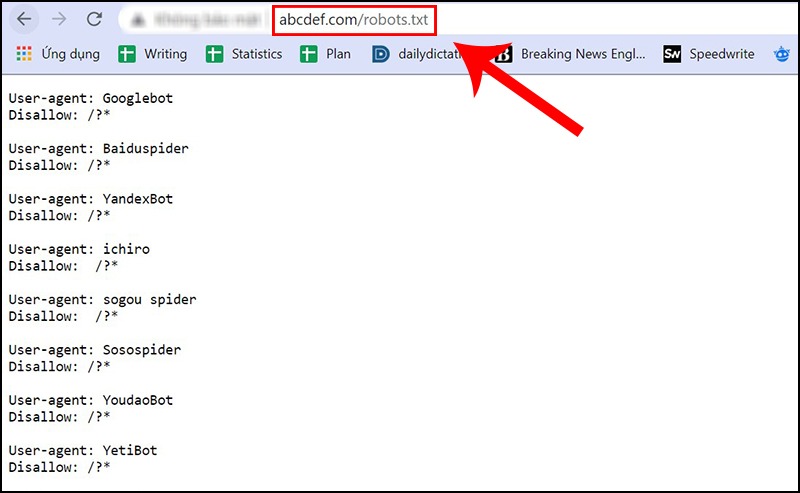

When you create a WordPress website, WordPress automatically serves a robots.txt file from the root of the site. For example, if your website address is example.com, you can view the file at example.com/robots.txt. It contains instructions like the following:

User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/

The line “User-agent: *” means that the rules that follow apply to all bots across the entire website. In the above example, the file specifies that bots are not allowed to access the wp-admin and wp-includes directories, which hold the WordPress admin screens and core files.
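As a quick check of what these rules mean in practice, the sketch below feeds them to Python's standard `urllib.robotparser` module and tests three made-up paths. The directive lines are kept directly under one another, because some parsers (Python's among them) treat a blank line as the end of a rule group.

```python
from urllib.robotparser import RobotFileParser

# The WordPress-style rules from the example above, with no blank lines
# between the User-agent line and its Disallow lines.
WORDPRESS_RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

parser = RobotFileParser()
parser.parse(WORDPRESS_RULES.splitlines())

# Map each sample path to whether Googlebot may fetch it.
results = {
    path: parser.can_fetch("Googlebot", "https://example.com" + path)
    for path in ("/wp-admin/", "/wp-includes/js/", "/blog/hello-world/")
}

for path, is_allowed in results.items():
    print(path, "allowed" if is_allowed else "blocked")
```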

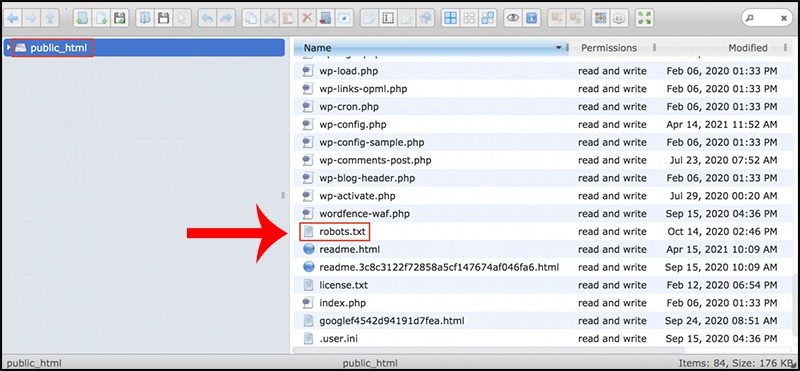

Note that this robots.txt file is a virtual file set up by WordPress by default upon installation and cannot be edited directly. Typically, the standard location of the robots.txt file in WordPress is placed in the root directory, usually called public_html or www (or the website name). To create your own robots.txt file, you need to create a new file and place it in the root directory to replace the old one.

How to check if a website has a robots.txt file?

Checking if a website has a robots.txt file is an important step to ensure that search engines can crawl data accurately. Here are some simple ways to check this:

Access directly via the browser

Open a web browser and enter your website address, followed by “/robots.txt” at the end of the URL. For example: “https://www.example.com/robots.txt”. If the file exists, the content of the robots.txt file will be displayed.

Use search engine tools

Search engines also offer webmaster tools for this. Google Search Console, for example, can show the robots.txt file that Google found for your website and help you check and analyze it.

Use online tools

There are many free online tools that allow you to check the existence and content of the robots.txt file, such as “Robots.txt Checker” or “SEO Site Checkup”.

Check through FTP or file manager

Log in to your website’s hosting server through FTP or the file manager provided by the hosting service. Look for the robots.txt file in the root directory (usually public_html or www).

These methods help you easily determine whether your website has a robots.txt file, allowing you to manage and optimize the data collection process of search engine bots effectively.
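The browser check can also be scripted. The following is a minimal sketch using only Python's standard library; the function name `robots_txt_exists` and its `opener` parameter are hypothetical choices made so the check can be tried without a network connection, and treating only HTTP 200 as "exists" is a simplifying assumption.

```python
import urllib.error
import urllib.request
from urllib.parse import urljoin


def robots_txt_exists(site_url, opener=urllib.request.urlopen):
    """Return True if <site_url>/robots.txt answers with HTTP 200.

    `opener` defaults to urllib.request.urlopen and can be swapped
    for a stand-in when testing offline.
    """
    url = urljoin(site_url, "/robots.txt")
    try:
        with opener(url, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # The server answered with an error status such as 404 Not Found.
        return False
    # Network failures (DNS errors, timeouts) are not caught here,
    # so the caller can tell "no file" apart from "could not check".
```

Calling `robots_txt_exists("https://www.example.com")` performs a real request, so run it only against sites you are comfortable querying.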

3 Simple Ways to Create a Robots.txt File for WordPress

Creating a robots.txt file for WordPress helps you control whether search engine bots are allowed or blocked from accessing certain parts of your site. Here are three simple ways to create a robots.txt file for WordPress:

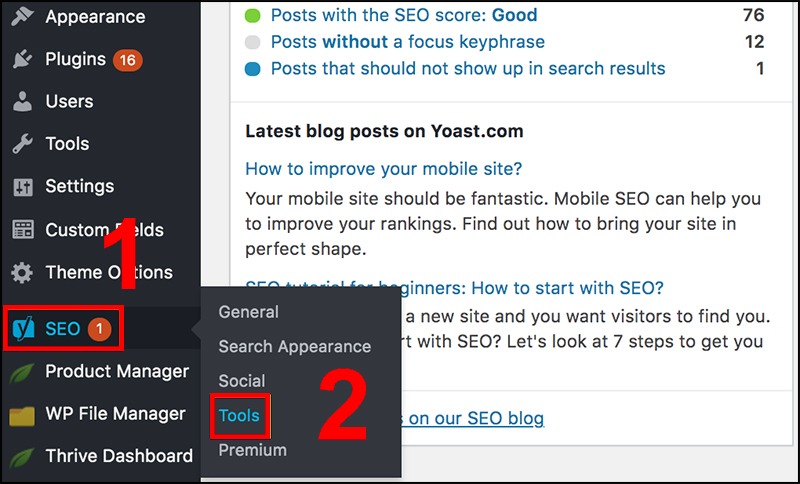

Method 1: Using Yoast SEO

- Log in to WordPress: First, log in to your WordPress admin to access the dashboard.

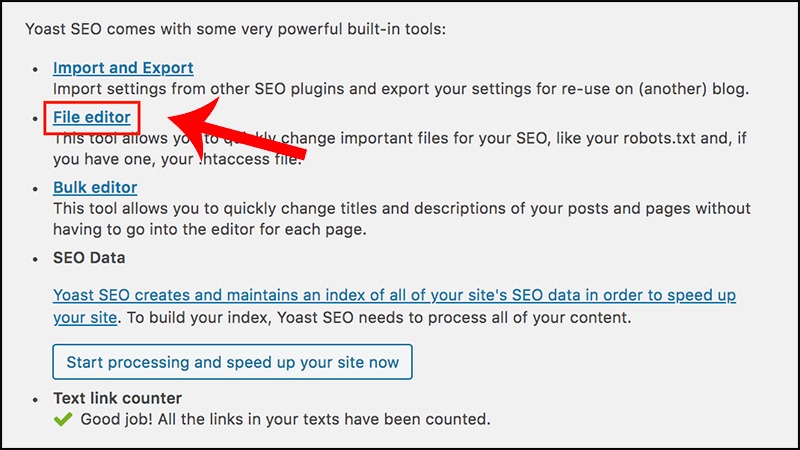

- Select SEO > Select Tools: On the left menu, select “SEO” and then select “Tools”.

3. Select File editor: In the Tools page, select “File editor”. Here, you will see the section to edit the robots.txt and .htaccess files. You can create and edit the robots.txt file here.

Method 2: Using the All in One SEO Plugin

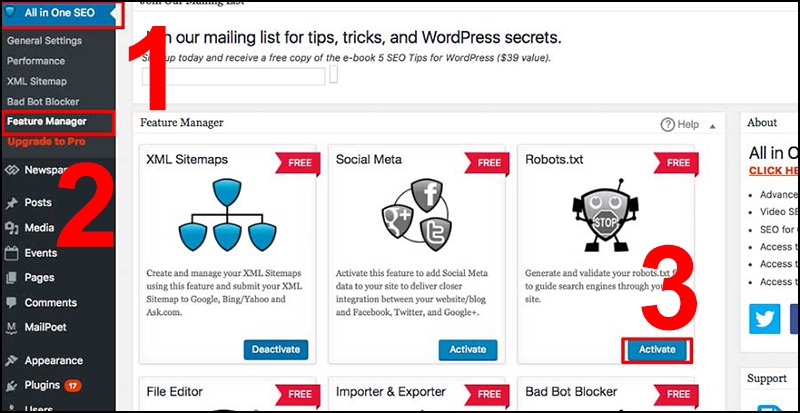

- Access the Plugin All in One SEO Pack interface: If you haven’t installed the plugin, you can download and install it from the WordPress Plugin repository.

- Select All in One SEO > Select Feature Manager > Click Activate for Robots.txt: In the main interface of the plugin, select “All in One SEO”, then select “Feature Manager” and activate the “Robots.txt” feature.

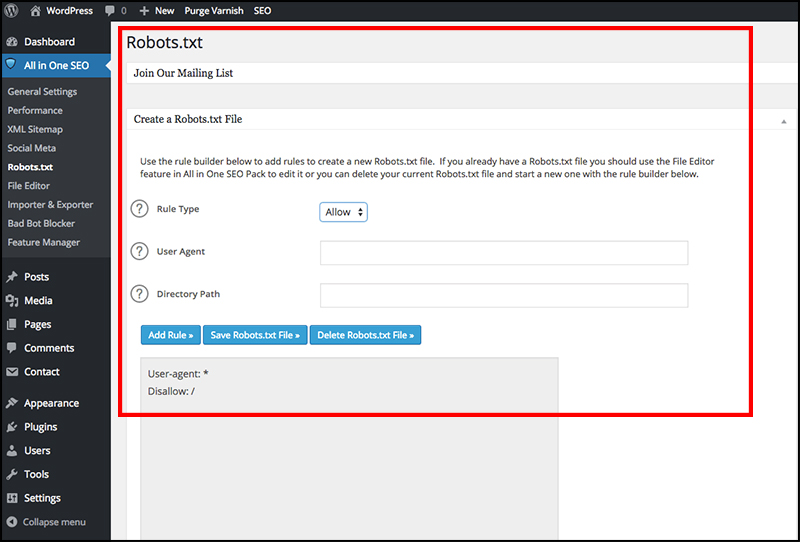

3. Create and adjust the robots.txt file: After activation, you can create and adjust the robots.txt file for your WordPress site. Note that All in One SEO may obscure some information in the robots.txt file to limit potential damage to the website.

Method 3: Create and upload the robots.txt file via FTP

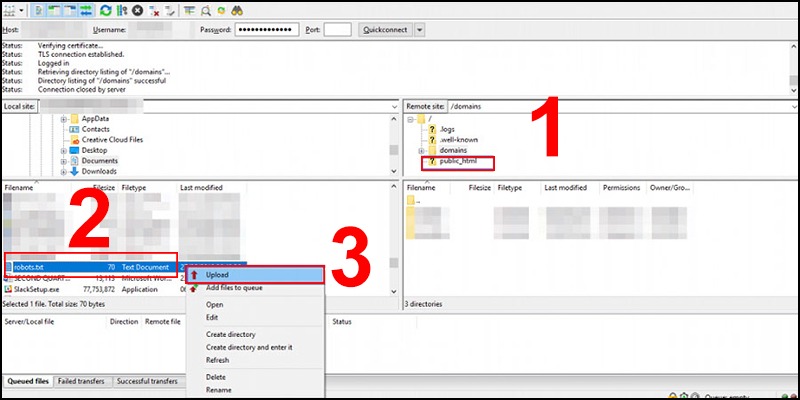

- Create a robots.txt file using Notepad or TextEdit: Open Notepad (Windows) or TextEdit (Mac), write the directives you want, and save the file as plain text under the name robots.txt.

- Open FTP and upload the file: Using an FTP client (such as FileZilla), access the root directory of the website (usually public_html or www). Upload the robots.txt file you just created to this directory.
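If you prefer to generate the file with a script instead of a text editor, the first step could look like the sketch below. The helper name `write_robots_txt`, the rules, and the sitemap URL are assumptions for illustration; the example writes into a temporary directory so that nothing on your machine is overwritten.

```python
import tempfile
from pathlib import Path

# Hypothetical directives for a WordPress site.
RULES = [
    "User-agent: *",
    "Disallow: /wp-admin/",
    "",
    "Sitemap: https://www.example.com/sitemap.xml",
]


def write_robots_txt(directory, lines):
    """Write `lines` to <directory>/robots.txt as plain UTF-8 text."""
    path = Path(directory) / "robots.txt"
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


# Demonstration: write the file to a temporary directory and read it back.
with tempfile.TemporaryDirectory() as tmp:
    saved_path = write_robots_txt(tmp, RULES)
    file_name = saved_path.name
    written = saved_path.read_text(encoding="utf-8")

print(written)
```

To produce a file you can upload, pass a real folder instead of the temporary one, then transfer the resulting robots.txt to the site's root directory with your FTP client as described above.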

Creating a robots.txt file helps you better control search engine bots and reduces unnecessary crawl load on your website. Keep in mind that it is not a security mechanism on its own.

Some notes when using the robots.txt file

Using the robots.txt file is an effective way to control how search engine bots crawl and index content on your website. However, to ensure effectiveness and avoid unwanted issues, you need to note some important points:

- Ensure correct syntax: A small syntax error can cause the bot not to understand your directives correctly, leading to issues in crawling and indexing content.

- Do not block necessary resources: Do not block resources such as the CSS and JavaScript files needed to display the website properly. If these resources are blocked, Google may have difficulty understanding the structure and content of your site.

- Check after editing: Whenever you edit the robots.txt file, check it again using tools like Google Search Console to ensure that the bots are following the new directives.

- Use the Disallow command cautiously: Only use the Disallow command for content you are sure you don’t want bots to access. Blocking important content by mistake can prevent it from being indexed.

- Specify sitemap location: Don’t forget to specify the location of the sitemap in the robots.txt file so that search engine bots can easily find and efficiently collect your data.

- Do not rely solely on robots.txt for security: Robots.txt only helps control bot access but does not protect data from unauthorized access. To secure information, use other security measures such as user authentication and access control.

- Regularly update and review: Regularly update your robots.txt file to reflect any changes on your website. Periodically review its effectiveness and make adjustments as necessary.

- Test in a staging environment before applying: If you are unsure about the directives in the robots.txt file, test in a staging environment before officially applying them to the website to avoid unwanted issues.
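Several of these notes (check after editing, do not block necessary resources, test before applying) can be combined into a small pre-deployment check. The sketch below is one possible approach using Python's standard `urllib.robotparser` module; the helper `check_rules`, the candidate rules, and the URLs are all hypothetical, and Python's parser may not match every search engine's interpretation exactly.

```python
from urllib.robotparser import RobotFileParser


def check_rules(robots_txt, must_allow, must_block, agent="Googlebot"):
    """Return a list of problems found in a candidate robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


# Candidate file that blocks /wp-content/, where theme CSS usually lives.
CANDIDATE = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/
"""

problems = check_rules(
    CANDIDATE,
    must_allow=["https://www.example.com/wp-content/themes/site/style.css"],
    must_block=["https://www.example.com/wp-admin/options.php"],
)

for problem in problems:
    print(problem)
```

Here the check reports that the stylesheet is blocked, which is the kind of mistake the second note warns about. An empty list would mean the candidate file matches your expectations for the URLs you listed.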

By noting and implementing these points, you will maximize the effectiveness of the robots.txt file, helping optimize your website for search engines and protecting important content.

Through this article, you can see that the robots.txt file is an important tool that helps you control how search engine bots crawl and index content on your website. By using the directives correctly, you can optimize the data collection process, protect sensitive information, and improve your website’s SEO performance. However, note that the robots.txt file cannot completely replace other security measures. Therefore, understanding and correctly applying the directives in the robots.txt file is crucial.

If you want to stay updated with more useful knowledge about SEO and web management tools, follow the ProxyV6 website. We always provide the latest and most valuable information to help you enhance your website’s performance. Don’t miss out!

How to ensure the syntax in the robots.txt file is correct and does not affect Google's indexing?

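Even a small syntax error can cause bots to misread your directives, which leads to problems in crawling and indexing. Write each directive carefully, and after every edit check the file with a tool such as Google Search Console so you can find and fix errors early.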

What resources should not be blocked in the robots.txt file to avoid affecting the display and functionality of the website?

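Do not block the CSS and JavaScript files that the website needs in order to display and function properly. If they are blocked, Google may have difficulty understanding the structure and content of your site, so use the Disallow command only for content you are sure bots do not need.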

How to check the effectiveness of the robots.txt file after making changes?

Use tools like Google Search Console to confirm that bots are following the new directives. If you are unsure about a change, test it in a staging environment before applying it to the live website.

Why should you not rely entirely on the robots.txt file to secure content on the website?

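The robots.txt file only gives instructions to bots; it does not block access. Search engines that do not follow the standard and malicious bots can ignore it, so sensitive content needs additional measures such as user authentication, password protection, and access control.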

How to specify the location of the sitemap in the robots.txt file and why is this important?

Add a Sitemap line to the robots.txt file that contains the path to your sitemap file, for example “Sitemap: https://www.example.com/sitemap.xml”. This helps search engine bots find the sitemap easily, understand the structure of the website, and collect your data efficiently.